A6: How Apple's custom silicon and iOS optimized each other

After delivering just the first two generations of its ARM processors, Apple was already recognized as a leading mobile silicon designer. Its next moves surprised the industry and began to reveal a strategy of tight vertical integration from silicon to OS that other device makers couldn't match, and didn't even seem to see as important until it was too late.

The Swift before Swift

By the middle of 2012, Apple had launched A4, A5 and a new A5X with a custom wide memory and graphics architecture powering "The New iPad," its third generation tablet with Retina display.

At the time, Apple's future for Ax mobile silicon was commonly seen to be effectively tied with Texas Instruments' OMAP 5, Nvidia's Tegra 4, and Samsung's Exynos 5 in an industry-wide race to deliver a new generation of mobile chips based on ARM's new Cortex A15 big.LITTLE design.

ARM's reference design paired sets of multiple fast and slow cores together to balance speed and power efficiency. Qualcomm was also just releasing its own new multiple-core Krait Snapdragon architecture implementing similar techniques.

That fall, Apple released its A6, which again doubled both its CPU and GPU performance over the previous year. Writing for AnandTech, Anand Lal Shimpi stated that "it looks like Apple has integrated two ARM Cortex A15 cores on Samsung's 32nm LP HK+MG process," adding that "this is a huge deal because it means Apple beat both TI and Samsung on bringing A15s to market," calling the Cortex A15 "the highest-performance licensable processor the industry has ever seen."

It was actually a bigger deal than even that. Rather than being first to deliver an accelerated version of a new ARM-licensed core, Apple had created an entirely custom new "Swift" CPU design for its dual-core A6. Swift was faster than anything anyone could license from ARM.

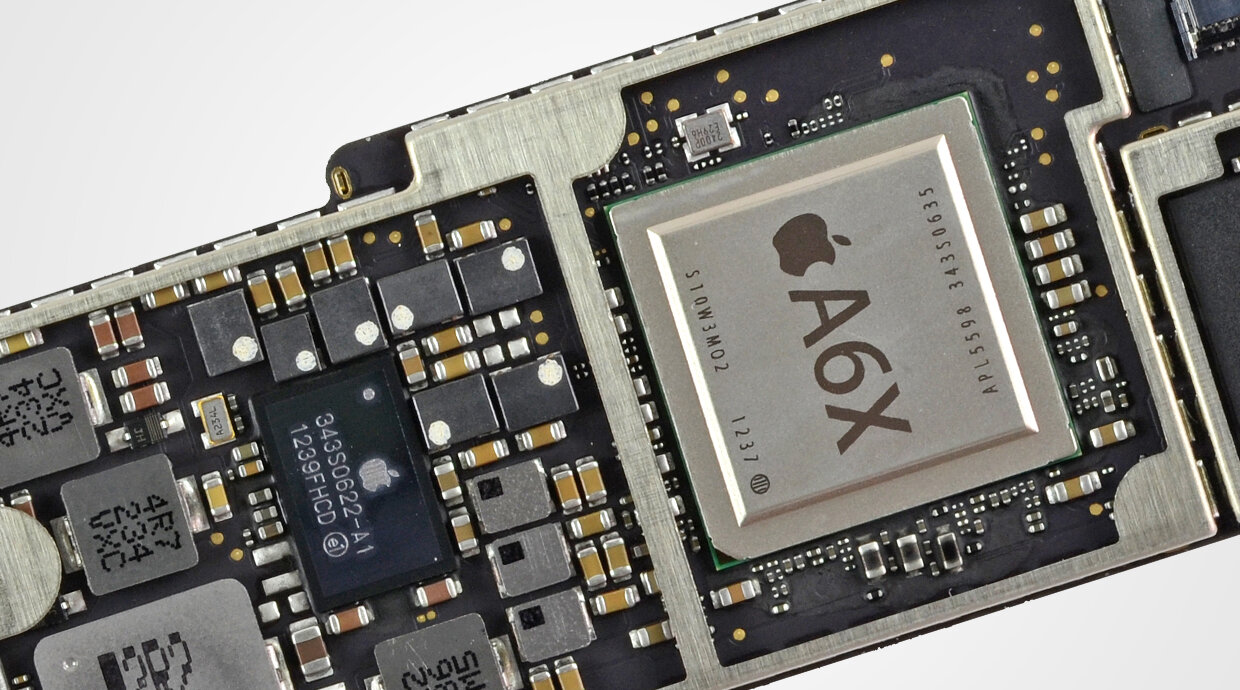

A6 with two Swift CPU cores

Apple's purpose-built silicon

Additionally, Apple's new Swift core didn't even follow ARM's supposedly state-of-the-art big.LITTLE many-core design concept specifying the use of sets of asymmetrical cores. Instead, A6 used two relatively big CPU cores that dominated its design.

As the operator of the world's most commercially significant mobile app platform, Apple had a unique insight into how to best accelerate real-world code running on a mobile device. The chip architects at ARM weren't running a leading App Store. Neither ARM nor Qualcomm was developing an operating system.

ARM's high-performance core designs appear to have been originally built with servers in mind. Just a few years prior, nobody had expected mobile devices to jump upward in sophistication so quickly, paid for by profitable unit sales of huge volumes of new devices that could sustain such a pace of silicon development.

Apple had just created an entirely new, viable category of tablet device in 2010. It had initially used the same chip for its iPad and iPhones for two years before releasing the A5X specifically for higher-powered iPad 3 graphics. Meanwhile, chip designers at other firms had been looking to expand into new embedded markets rather than seeking to double the performance of chips for a tablet market that didn't even exist.

So while Apple may have appeared to be only catching up with other mobile chipmakers in 2012, the reality was that Apple's first entirely custom core design—built as the company's iOS strategy began to shift from a hopeful expectation to an established success—hadn't even dropped yet.

Apple's unconventional A6 cores

ARM's big.LITTLE was invented as a way to use multiple, high-performance parallel cores in a mobile device: it allowed an option to turn them on when necessary and switch to low-power cores when they were not. This required complex cache coherency between the sets of cores to work effectively. The two sets of cores essentially acted like two different processors, which the system could switch between, resulting in lots of duplication on the SoC.

ARM's big.LITTLE switched between sets of fast Cortex-A15 and efficient Cortex-A7 cores

The many-core architecture wasn't optimized for typical use cases on mobile devices in 2012. On a phone, a device is often sitting idle, but when the user wants to do something, it needs to light up and focus on the task at hand, then shut off again just as rapidly to conserve battery.

That's entirely different from a server, where battery life isn't an issue and multiple cores can constantly process lots of independent tasks at once. The primary way to make big.LITTLE look great on a mobile device was to run a server-like task on it, which is exactly what a multicore benchmark looks like. But in the real world, users were rarely trying to run sustained workloads that could effectively use multiple cores at once.

That appears to be why Apple focused on using more of its silicon to deliver two larger cores, which could ramp up quickly, blaze through a single core task rapidly, and then quickly scale back down to idle. Fewer, larger cores dedicated more silicon real estate to the typical use case of a mobile device: blazing through a single task rapidly—primarily to drive a responsive user interface.

Packing four or more "performance cores" into the same area, the Cortex A15 design effectively scaled down the size of its largest engine to make room for the possibility that a circumstance might occur where four smaller engines could be working in tandem. Apple's A6 appeared unusual for having two larger CPU engines.

Apple's unique silicon feedback loop

Apple understood better than any other silicon design team exactly what made every facet of iPhones scream. Its silicon designs could custom-deliver exactly what the OS and mobile apps needed to feel fast while running efficiently. In particular, Apple cared more about "sprint" performance that delivered a responsive user interface, while rivals seemed to largely be aiming to deliver benchmarkable, sustained CPU performance in the model of a PC.

Google wasn't building optimized Androids; it was building the most generic, multi-purpose platform that could possibly exist, in the hopes that everyone would use Android because it could run on essentially any CPU and GPU architecture. Google wasn't even using hardware-accelerated encryption and was pushing "free" media codecs rather than state-of-the-art hardware-optimized codecs that required licensing.

Samsung, the only other company that, like Apple, had teams building both phones and silicon chips, was building generic hardware and software that could run on a Qualcomm Snapdragon as well as its own Exynos, or whatever other chip might be cheap and good enough to use. Even in its own chips, it had largely just followed the generic designs created by ARM, both for CPUs and for graphics with ARM's Mali GPU. Samsung was also selling Exynos chips to other companies, meaning that its designs needed to generally please broad markets, where price is a very critical factor. Samsung's first custom SoC core wasn't delivered until 2015, a point where Apple was hopelessly ahead.

Apple had been optimizing iOS from the kernel to the user interface for one silicon architecture. Additionally, the needs of Apple's OS and app-level developers could be directly fed to the teams designing its future generations of silicon. Since Apple was the only user of its Ax chips, it could design for proprietary, premium devices where cost wasn't the most important factor. Apple wasn't just benefiting from silicon economies of scale by producing its own chips; it was also radically enhancing the meritocratic feedback loop that improves products in a market.

Apple only built A6 for its own use: iPhone 5

Integration at Apple between various hardware and software teams was resulting in a vastly optimized, premium product. The lack of integration between Android phone makers, Android OS development, and the various chip makers supplying them resulted in a poorly optimized, basic product on many levels. At the same time, Apple's competitors were forced to build phones that packed on far more RAM and clocked their chips higher just to appear competitive.

Because iOS could perform well at lower clock speeds and required less RAM, it could also deliver better battery life. In fact, Apple's silicon was being refined and optimized so rapidly that its comfortable performance lead allowed the company to take on even more specialized tasks. Apple's silicon design team developed its own storage controllers, built custom audio logic, built up its own image signal processor expertise, and ultimately designed its own GPU architecture.

Everything Apple added to its Ax chips was also benefitting from vast economies of scale because Apple could bank on profitably selling well over 100 million units of its best chips in their first year of availability. Apple's performance-oriented silicon competitors TI, Nvidia, and Intel could not. Within just the first three years of Apple's Ax developments, it was becoming clear that this wasn't going to change, either.

At the same time, Apple's mobile devices were starting to disrupt other product categories, supporting further growth in sales volumes that continued to drive advancements in silicon, as the next segment will examine.