ARM to A4: How Apple changed the climate in mobile silicon

The history of the first four decades of PCs has largely been told in terms of operating systems: Apple's early lead with the Mac was taken over by Microsoft's Windows, which then lost ground to Google's Android. But this narrative overlooks a force even more powerful than the software platform: low-level hardware, and specifically the silicon microprocessor, historically the largest driver of technical progress in the industry.

Across the 2010s, Microsoft and Google embraced a variety of mobile processors, including the Qualcomm Snapdragon, Nvidia Tegra, Texas Instruments OMAP, Samsung Exynos, and Intel Atom, in various attempts to seed Windows and Android mobile devices as broadly as possible.

Rather than scattering its investments across a wide range of silicon architectures, Apple focused its mobile strategy on a single chip designer, concentrating vast economies of scale in one company. This has dramatically shifted the industry.

However, Apple's recent development of mobile SoCs, from A4 through its latest A13 Bionic, was not the company's first foray into custom processors. In fact, efforts it made decades ago, which did not appear very successful at the time, helped align the planets for Apple's modern moves. Here's how it all started.

Something slightly magical happens when you set goals that feel a bit crazy

Last month, Apple's chief executive Tim Cook delivered a speech at the 30th anniversary gala for Ceres, a non-profit promoting sustainable energy.

Cook was specifically describing Apple's recent sustainability efforts, but his comment could also apply to the radical, ambitious shift Apple set in motion when it decided that, in order to build the future of mobile devices, it needed to design its own custom silicon.

"I have found that something slightly magical happens when you set goals that feel a bit crazy," Cook said. "The effort will take you to places you didn't anticipate, but the results are almost always better than what you thought was possible at the outset."

People who are really serious about software

When Steve Jobs launched iPhone in 2007, he cited the famous quote, "people who are really serious about software should make their own hardware," originally stated by Xerox PARC alum Alan Kay way back in 1982. It reflected, in a sense, another line attributed to Kay from a decade earlier, that "the best way to predict the future is to invent it."

Kay's early-1980s work had a significant influence on Apple, which at the time was developing mainstream consumer hardware that could deliver, in a commercially viable way, the graphical desktop concepts that had been percolating at PARC.

Xerox had invested in Apple to help deliver its advanced computing concepts in a successful consumer product. Unlike PARC's vastly expensive, hand-assembled workstations, built for researchers without any cost constraints, the 1984 Macintosh had to be mass-produced and affordable. That led Apple to use Motorola's 68000 microprocessor.

Apple's Macintosh not only implemented Xerox UI concepts, it developed an affordable hardware platform | Source: iFixit

The Mac originally sold in volumes of just a couple hundred thousand units, much lower than Apple initially imagined. That left the company entirely dependent upon Motorola to develop and advance new generations of the 68000 chips it used, and put Apple in direct competition with everyone else using Motorola's silicon.

However, as Apple entered a profitable Golden Age, it became increasingly free to pursue ambitious ideas, including a future of mobile tablets and vastly more powerful new workstations, both powered by newly emerging RISC processors. Apple decided early on that it should design its own custom processors.

Apple's wild ambition takes on a lot of RISCs

Two RISC chip projects had just gotten started in Apple's Bay Area backyard: Stanford University's MIPS and the University of California, Berkeley's RISC, later commercialized by Sun as SPARC. IBM was working on what would become POWER, HP had PA-RISC, and DEC would later develop Alpha. All of these projects pursued a new approach to microprocessor design that threatened to make existing CISC chips obsolete, including Intel's x86 used in DOS PCs and Motorola's 68000 used in Macs.

In 1985, a power struggle developed within Apple between a young Steve Jobs, who wanted to aggressively promote the new Mac as a powerful platform for business and education users, and John Sculley, the chief executive Jobs had recruited to run Apple. Sculley ultimately convinced the board to strip Jobs of his operational role running the Macintosh division and to pursue a more conservative strategy of extending more profitable Apple II sales while waiting for the Mac to prove itself.

That year, Jobs left Apple to develop his ambitious ideas externally at a new startup, NeXT. Sculley's Apple made incremental enhancements to its existing computers, including the 16-bit Apple IIGS and new, more PC-like Mac II models driven by successive generations of ever-faster Motorola 680x0 chips. At the same time, Sculley also launched a more forward-looking Advanced Technology Group tasked with exploring the possibilities of the distant future.

Across its first four years, Apple's ATG set to work developing its own Scorpius RISC architecture, a design using multiple processor cores and an early implementation of SIMD parallel processing to rapidly handle repetitive tasks such as graphics. Apple intended to use Scorpius to develop an Antares CPU powering its next-generation Aquarius computer, but these all remained the stuff of folklore despite millions of dollars in top-secret funding.

Beyond ATG, Apple's mid-1980s profits, shielded from any real competition in graphical computing, also allowed the company to leisurely throw millions of dollars at Hobbit, another RISC chip originating at Berkeley and being developed by AT&T.

Hobbit chips were expected to be powerful enough to run handwriting recognition and other pen-based computing concepts imagined to be important in the early 1990s. However, AT&T consistently struggled to deliver functional chips, according to "Mobile Unleashed," a SemiWiki history of the evolution of ARM processors, written by Daniel Nenni and Don Dingee.

Open Apple Shift

As Apple became bogged down in vastly complex and extraordinarily expensive futuristic research projects, it was becoming increasingly obvious that commercial success required delivering incremental, tangible progress. Apple's failure to develop or fund a practical RISC processor on its own forced it to look at existing alternatives it could adapt for its own uses.

While Apple was blowing millions of dollars funding complex, futuristic RISC concepts that ultimately went nowhere, the much smaller British PC maker Acorn set out to develop its own cheaper alternative to Motorola's 68000 on a shoestring budget. The result was a simple, elegant RISC processor it began using to power its proprietary 32-bit Archimedes PCs in the late 80s.

First generation ARM chip

Acorn had hoped to share the expense of developing its chip by selling ARM chips for use in other companies' business computers, following the model of Intel's x86 or Motorola's 68000. But there simply wasn't much interest in another new PC chip platform incompatible with existing processors. Outside of PCs, however, emerging interest in battery-powered portable systems requiring lower power consumption had prompted ARM to implement a new static design that allowed the CPU clock to be slowed down or even paused when idle, without losing data.

Acorn was growing increasingly concerned about funding a working ARM chip design that nobody else was using. Apple, meanwhile, was worried about continuing to fund AT&T's Hobbit chip, which it needed but which remained dysfunctional. VLSI, the chip fab both companies were using, suggested the two discuss a partnership.

Acorn's chip was about as powerful as the Mac's original Motorola 68000 processor, but it required far fewer transistors thanks to its clean, efficient, and modern RISC architecture. Apple determined that the "Acorn RISC Machine" could be adapted for efficient use in the new generation of Newton mobile devices it was designing. In 1990, the three companies set up a joint venture, Advanced RISC Machines Ltd., and recruited former Motorola executive Robin Saxby to run it.

Openly license everything

Neither Apple nor Acorn had a strong sense of what ARM could offer anyone outside their own needs, but Saxby envisioned turning the ARM architecture into a global embedded standard anyone could adopt, a sort of UNIX for silicon. Saxby had earlier written a business plan proposing that Motorola spin off its microprocessor operations into a design service for third parties. Motorola passed on the idea, so Saxby effectively took the concept to the new ARM.

ARM first set to work building incremental chip enhancements for Acorn's PCs and Apple's Newton tablet. After proving itself as a capable architecture, ARM licensed its CPU core to Texas Instruments, which put an ARM CPU core next to its own DSP core to provide a baseband "System on a Chip" capable of powering the newly emerging generation of 2G GSM phones led by Nokia.

Nokia's iconic 8110 banana phone from "The Matrix" was the first to use an ARM chip

At DEC, Alpha RISC chip designer Dan Dobberpuhl set up a design group to merge the performance of Alpha with the power efficiency of ARM, resulting in the StrongARM architecture that powered the second generation of Apple's Newtons.

The apparent success of openly licensing technology had earlier prompted Apple to license its Newton platform to other hardware makers, including Motorola and Siemens. Cirrus Logic licensed the ARM design to develop "Newton compatible" chipsets that Apple's licensees could use to build their own mobile devices. Apple also began licensing the classic Mac OS to third-party hardware makers, including Bandai's Pippin game system.

Apple's Newton and Mac OS licensing programs didn't expand the company's sales

The emerging idea that "open always wins" also prompted 3DO to build a home video gaming platform that used an ARM processor paired with an audio DSP and specialized graphics accelerator hardware, which like Apple's Newton could be licensed to hardware manufacturers as a competitive alternative to the proprietary game consoles developed by Sony and Nintendo.

In parallel, Apple also began collaborating with IBM and Motorola to scale down IBM's workstation-class POWER architecture for use in desktop computers, under the name PowerPC, with the goal of allowing Apple to upgrade the Mac into the performance league of powerful Unix workstations that were already using advanced RISC processors such as the DEC Alpha, Silicon Graphics' MIPS, and Sun's SPARC.

The AIM Alliance actually intended PowerPC to anchor an openly licensed "Common Hardware Reference Platform" that would replace Intel's x86 PC architecture and run essentially any OS: Apple's Mac OS, IBM's AIX UNIX and OS/2, Sun Solaris, BeOS, NeXTSTEP, Microsoft Windows NT, as well as new modern operating systems being jointly developed by Apple and IBM.

Open didn't always win

However, while history selectively remembers Microsoft's wild success in licensing Windows, it conveniently forgets a series of licensing programs that effectively destroyed their makers. 3DO won CES awards and lined up a huge roster of licensees including Panasonic, LG, AT&T and Samsung, yet the more sophisticated Sony PlayStation achieved sales volumes that simply crushed 3DO.

For Apple, OS licensing did little to expand the market share of its Mac or Newton platforms. Instead, Apple's specialized licensees largely skimmed the cream off of Apple's addressable markets, leaving the company to service higher-volume but lower-profit entry-level Mac sales.

Even Microsoft's parallel effort to deliver its own broadly licensed Windows CE mobile platform for "handheld computing" failed to find any real success, despite running on a variety of mobile chips including ARM, MIPS, PowerPC, Hitachi SuperH, and Intel's x86, and despite lining up many hardware licensees. It certainly never replicated anything close to the success Windows had experienced on PCs.

Microsoft's Windows CE didn't accomplish much because of low sales, despite lots of licensing

At the same time, popular history also selectively remembers Intel's x86 as being the most important chip family of the modern PC era. In reality, the ARM architecture grew dramatically across a wide variety of embedded applications where there was no need for x86 compatibility. Across its various licensees, ARM chips were collectively selling in much larger volumes than x86 processors. The openly licensed PowerPC, however, didn't have the same success.

Across the rest of the 1990s, both of Apple's silicon efforts came to be regarded as flops, hopelessly associated with Apple's own deepening troubles. ARM saw only marginal success at Apple as sales of its Newton MessagePads and eMates slowly continued, while PowerPC failed to gain traction outside of Apple's Macs, leaving the company in the impossibly difficult position of trying to match the economies of scale that Windows PCs enjoyed with Intel x86 chips.

The silver lining of Apple's two apparent silicon flops

However, interesting developments later grew from both of Apple's efforts in custom silicon. The openly licensed ARM architecture produced a far more energy-efficient mobile CPU than Intel's proprietary x86 could, making it useful in embedded applications and in the phones popularized by Nokia and others. To suit phones, ARM added "Thumb," a compact 16-bit encoding of its 32-bit instruction set that shrank program code, allowing handset makers to skate by with less memory.

The ultra energy-efficient design that Apple set out to create for its new handheld Newtons was "a bit crazy" in the early 1990s, but it turned out to be incredibly important in the 2000s, when all-day battery mobility rapidly became more valuable than an overclocked hot x86 PC box with howling fans sitting under a desk.

By 2000, lots of chipmakers were using ARM's licensed designs, and several firms had obtained architectural licenses that allowed them to custom-optimize their own ARM-compatible CPU cores, including Texas Instruments and Intel, whose XScale line grew out of the StrongARM group it had taken over from DEC in 1997.

Apple's involvement in founding ARM also left it with stock holdings that it sold off under Steve Jobs for over $1.1 billion between 1998 and 2003, helping to mask the severity of Apple's financial situation while giving the company time to execute its strategies to modernize the Mac and deliver iPod.

The 2001 iPod, somewhat ironically, marked Apple's return to using ARM processors. Fabless chip design firm PortalPlayer had developed a ready-to-use media player SoC incorporating two generic ARM cores that Apple could use "off the shelf" to deliver the new product.

2001 iPod, with PortalPlayer chipset | Source: iFixit

Similarly, the clean, modern PowerPC architecture was picked up by a variety of firms doing specialized work that Intel's x86 was not really optimal at handling. That included the 2000 partnership between Sony, Toshiba, and IBM to build the Cell processor used in Sony's PlayStation 3; a subsequent 2003 partnership between IBM and Microsoft to develop the related XCPU chip powering the Xbox 360; and the mid-2000s founding of TI-backed fabless chip designer P.A. Semi, where another team led by Dobberpuhl developed the super-fast, ultra-energy-efficient PWRficient PA6T architecture for embedded uses, including signal processing, image processing, and storage arrays.

Prior to these developments, PowerPC had been expected to deliver ultra-fast chips powering desktop PCs in direct competition with Intel's x86. But across the 1990s, Microsoft and Motorola dropped the ball in getting Windows to work well on PowerPC. Other failed efforts to port existing desktop platforms to PowerPC meant that Apple ended up as the only significant PC maker shipping PowerPC systems.

In the mid-1990s, startup Exponential Technology set out to develop an even faster version of the PowerPC architecture than IBM and Motorola were delivering, resulting in the x704, a chip Exponential hoped to sell to Apple to power its Macs. The x704 merged bipolar transistors and CMOS memory on a single chip, with custom silicon design optimizations that enabled it to run at 533MHz, much faster than the second-generation, 200 to 300MHz PowerPC 604e chips from Motorola that were powering Apple's Power Macs in 1997.

Exponential Technology's x704 was once hailed as the Mac's savior

Motorola convinced Apple that Exponential couldn't deliver its faster chips reliably enough, limiting Exponential to selling its ultra-fast chips to smaller Mac cloners. Motorola's next generation of PowerPC G3 chips, along with Jobs' move to kill Mac clone licensing, erased the future of Exponential's business.

However, Exponential engineers took their custom chip-accelerating techniques to a new firm, later named Intrinsity. That firm developed a portfolio of technologies it called "Fast14," which it demonstrated could accelerate a variety of existing chip architectures into "FastCore" versions. But those tweaks were also viewed as expensive and time-consuming to deliver, and they needed to be redone with every new process node.

Chip designers commonly relied on ever-smaller process nodes to deliver much of their speed benefits for "free," simply by shrinking down the chip design. As a result, Intrinsity's idea of custom tweaking the logic layout for each process node was not enthusiastically adopted.

In 2007, Intrinsity worked with ARM to apply Fast14 to the Cortex-R4 core, but ARM didn't pursue further work with the firm. ARM even reportedly advised Samsung against working with Intrinsity to "Fast14" the Cortex-A8 core, under the assumption that it wouldn't achieve better results than Texas Instruments' internally-customized OMAP 3 had.

A 2010 review of the firm's technology by AnandTech detailed that the startup had burned through $100 million in funding over its first ten years, and still hadn't been able to attract much attention or become profitable. Investors were losing interest in throwing more money at what appeared to be an impractical set of technologies.

Ripe for the picking by Apple

Unlike the initial iPods, which used hardware developed by PortalPlayer, Apple's first three iPhone models used ARM SoCs that were "customized" in the sense that Apple special-ordered features such as a more powerful GPU than was typical and vastly more RAM than most other smartphones of the time.

Unlike existing phones, Apple's iPhone supplied a much more powerful Application Processor. It paired an ARM CPU core with PowerVR graphics and 128MB of RAM, making it capable of running a stripped-down version of Apple's desktop Mac OS X. The new iPhone also incorporated a separate Baseband Processor, built by Infineon, which paired an ARM CPU with a 2G DSP in its own package. This independently ran its own OS, handling mobile network traffic and communicating with the Application Processor the same way a modem connects to a conventional PC.

The next year, iPhone 3G used the same AP, scaled down to a new process node, and a new 3G Baseband Processor modem with GPS. The next year's iPhone 3GS used essentially the same modem but a faster AP. To remain competitive, it was clear Apple would need to achieve a much faster cadence in new chip speed and capability.

Prior to A4, Apple special-ordered three generations of Samsung-built SoCs

There were a few custom-accelerated ARM Application Processor designs in the pipeline that Apple could soon buy, including Qualcomm's new Snapdragon, built around its custom Scorpion CPU core. The chip had reportedly taken four years and cost $300 million to deliver, and it paired Qualcomm's custom ARM CPU with the firm's Adreno GPU and a CDMA/GSM modem, all in a single package.

Texas Instruments was also preparing its OMAP 3 using PowerVR graphics, a chip family that had been powering Nokia's most advanced "internet tablet" mobile devices. Nvidia was also aiming to deliver Tegra, a new ARM SoC incorporating a design it acquired from PortalPlayer paired with a scaled-down version of its desktop GPU.

Intel was also rushing into the mobile market to deliver Silverthorne, a scaled-down version of the x86-- later branded as Atom-- that was rumored to have been prepared for Apple by Intel after the chip giant realized what a mistake it had been to refuse to build chips for iPhone before it arrived.

Intel had just recently won Apple's Mac business in the 2006 transition from PowerPC to x86, and it was broadly assumed that Intel would also fuel Apple's silicon needs in other areas. Intel's own ARM business, XScale, was sold off to Marvell just as Apple began to dramatically expand its appetite for ARM chips. With rumors swirling that Apple had plans for a tablet, Intel wanted to seal a deal for Atom.

Yet after years of relying on Motorola and IBM to deliver PowerPC chips that failed to materialize, then running into the same issue with PortalPlayer, followed by Intel's refusal to build iPhone chips, it's easy to see why Apple wanted to own its future supply of mobile chips. In fact, Apple decided early on that it needed to radically accelerate the development of a new processor for future iPhones and its upcoming iPad.

Apple actually launched this effort in parallel with unveiling the App Store. In retrospect, it's funny that outside observers were claiming at the time that Apple wasn't moving quickly enough, that it should have opened the App Store a year earlier, that it couldn't afford to delay its Mac OS X release by even a few months, or that it really should be scrambling to get Sun Java or Adobe Flash working on iPhone, or working on some other priority such as MMS picture messaging.

In early 2008, Apple acquired P.A. Semi and worked with Intrinsity and Samsung to develop what would eventually become the A4. It launched this ambitious project as iPhone was just barely proving itself, in an extremely competitive market full of entrenched mobile rivals-- and now attracting the attention of new entrants who were also larger and better funded than Apple.

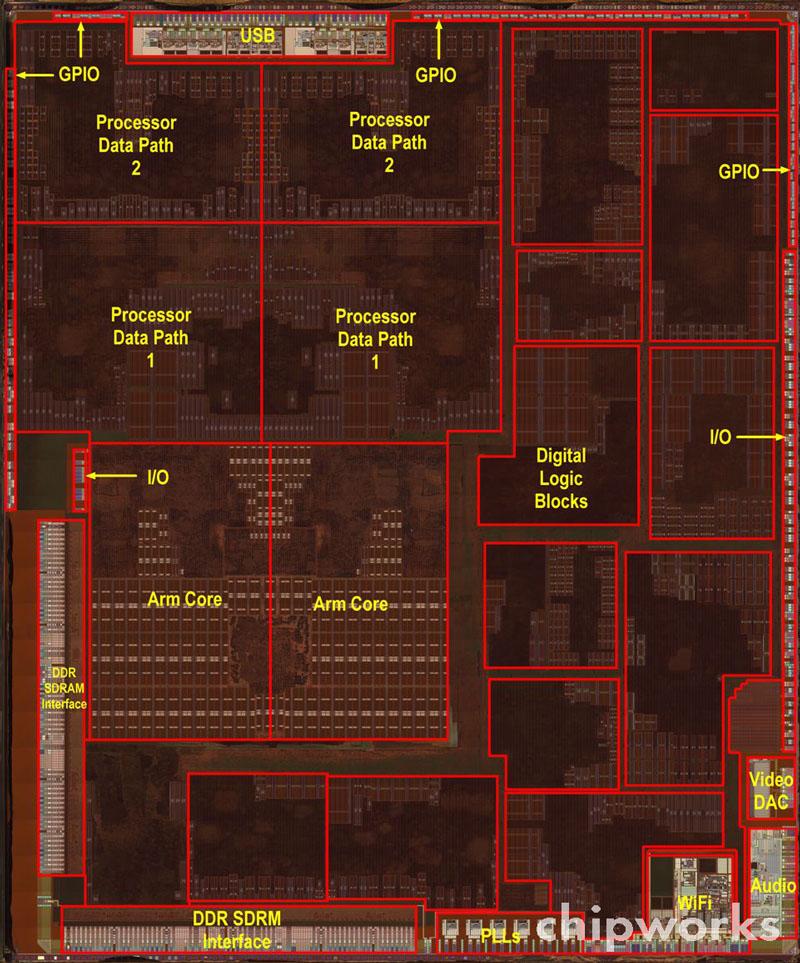

Apple's A4 and Samsung's S5PC110 Hummingbird shared significant design work, but were not identical

"It is believed in industry circles," AnandTech wrote in 2010, "that Samsung asked Intrinsity [to] develop a FastCore version of the Cortex-A8 at the behest of Apple for A4, and also ended up using it for the S5PC110 / S5PV210 [Exynos 3] after splitting the cost."

It would take years to develop an all-new processor. But the timing for Apple to assemble a silicon design team and develop its own ARM CPU, leveraging the silicon design savvy of a new generation of modern chip designers, proved to be ideal, in part because by 2009, Intrinsity was in dire financial straits and appeared desperate for a buyer to swoop in and save its engineering talent pool.

In addition, while Intel was actively drumming up support for its x86 Atom in mobile devices, IBM's console chip business faced a limited future: AMD would later win the x86-based CPU orders for Sony's PS4 and Microsoft's Xbox One consoles, ending IBM's run of Cell and XCPU console designs. That gave Apple opportunities to hire away world-class chip design talent from IBM.

Apple secrecy behind the scenes

In 2009, Samsung publicly announced its Hummingbird partnership with Intrinsity, describing it as applying Fast14 techniques to ARM's generic Cortex-A8 CPU core to push it from its intended 650MHz up to 1GHz.

EE Times quoted Bob Russo, Intrinsity's chief executive, as saying, "we'll probably have the fastest [Cortex-A8] part manufactured to date with the best megahertz per Watt and the lowest leakage." Russo also stated, "we did [Hummingbird] in 12 months for a small fraction" of the reportedly $300 million cost of Qualcomm's Snapdragon work.

Yet despite working closely with Intrinsity and being fully aware of the potential of its technology, it was not Samsung but Apple that decided to acquire the company in 2010. While public reports made no mention of Apple's involvement until Jobs announced A4 at the iPad launch in 2010, this omission was clearly intentional. Apple had similarly operated in secrecy to develop A4's mobile graphics.

In 2008, AppleInsider exclusively reported that Imagination Technologies had announced a deal to license its "next generation graphics and video IP cores to an international electronics systems company under a multi-use licensing agreement," and then later named Samsung as holding only a manufacturing license for those technologies. That indicated Apple was the one customizing the PowerVR GPU, while Samsung was only building it.

While Apple and Samsung apparently split the costs of developing the Hummingbird/A4 chips, Apple's 2010 acquisition of Intrinsity-- announced right before iPad launched-- meant that Apple gained exclusive access to the technologies that advanced its CPU speed beyond the ARM reference design. However, Apple didn't have exclusive access to PowerVR graphics; anyone could license that GPU.

It's telling that Samsung didn't.

It's also interesting that multiple contemporary reports portrayed Apple as a desperate, befuddled company that was acquiring chip design companies but then losing "most of" the employees of its acquisitions to its competitors. Reports by AnandTech and others echoed the idea that "most of the P.A. Semi engineers have since moved on from Apple to work in a startup named Agnilux (which was recently acquired by Google)."

That report also concluded, "All in all, it can't be said that Apple's acquisition of Intrinsity is a slam dunk."

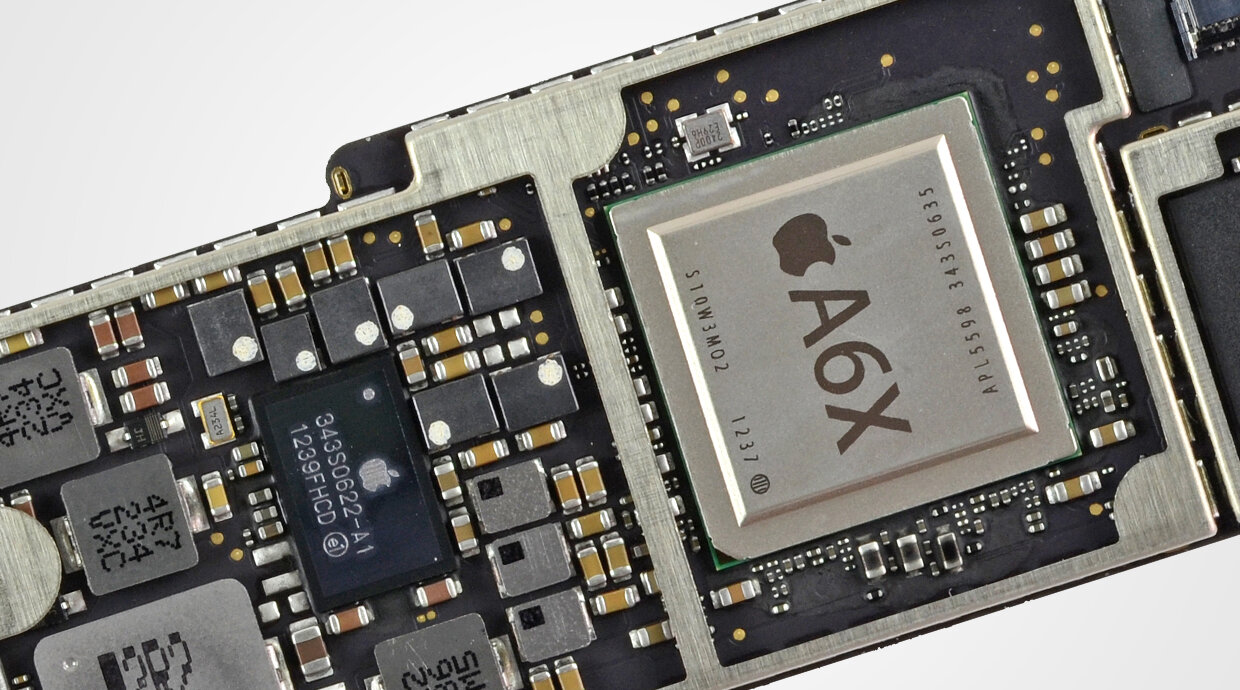

Apple doesn't detail the A4

"We have a chip called A4," Jobs said on stage at the original iPad event, "which is our most advanced chip we've ever done, that powers the iPad. It's got the processor, the graphics, the I/O, the memory controller-- everything in this one chip, and it screams."

Apple provided little real detail about the A4, however. Jon Stokes of Ars Technica wrote at the time that its design "isn't anything to write home about," and apparently assumed that if the new iPad didn't have a camera, the A4 wouldn't have an Image Signal Processor and therefore wouldn't be suitable for powering a smartphone.

But rather than being a wild, speculative moonshot stab at just delivering a tablet chip, A4 had been a meticulously planned strategy for producing a high volume chip capable of powering multiple devices, including a Retina Display iPhone 4 capable of FaceTime videoconferencing, and an all-new iOS-based Apple TV by the end of that year. That wasn't obvious until the strategy played out.

Apple also only later revealed that its A4 project had actually begun in 2008. It was led by Johny Srouji, who had "held senior positions at Intel and IBM in the area of processor development and design." Srouji had been recruited away from working on POWER7 at IBM by Apple's then-hardware chief Bob Mansfield, specifically to lead the development of the A4.

Attracting top talent would require an ambitious and significant project. The idea that A4 was a Samsung chip that Apple merely vanity-branded strains credulity; it's part of a broad narrative that likes to suggest that Apple is just a fake marketing company that "can't innovate," an incredible media delusion that persists even today, fueled by absolutely clownish reporting led by Bloomberg and the Wall Street Journal.

Apple's 2008 acquisition of P.A. Semi actually brought around 150 new employees into Apple's existing silicon engineering team of around 40. Some of the principal members of P.A. Semi did leave, creating the Agnilux startup that Google did acquire. But that only involved a team of around ten people, so the entire narrative that Apple was buying up companies and all the talent was leaving to join Google or run somewhere else is pure nonsense.

Dobberpuhl, who had founded P.A. Semi five years before Apple acquired it, did later retire from Apple just before A4 was delivered. Dobberpuhl was famous for having developed the original DEC Alpha, as well as the design center at DEC that licensed ARM and developed the StrongARM chips Apple used in the last Newtons. He'd also led the development of a MIPS SoC, contributing to his already legendary status in the semiconductor industry. But when Apple acquired P.A. Semi, Dobberpuhl was already in his 60s. The media fantasy that he was snubbing Apple and leaving to build entirely new generations of chips for Google that would make Android untouchable was absurd.

All these years later, the custom silicon that Google was imagined to be inventing for its Nexus and Chromebooks and Pixels simply never materialized, while Apple has turned into a leading chip design firm with the largest installed base of mobile devices using state-of-the-art silicon.

Apple's vast sales of mobile devices have enabled it to rapidly launch advanced new technologies ranging from Metal graphics to computational photography to mainstream augmented reality to the TrueDepth sensors powering Face ID and Portrait Lighting. The next segment will look at how Apple's new mobile silicon strategy differed from its rivals, right from the start.